Understanding and Leveraging Machine Learning in Conversational AI (using Rasa)

What makes a good conversation? A good conversation is one where you get the person you are speaking with interested in talking, maybe by asking a few questions, making small talk and keeping up the filler conversation.

Consider this conversation:

Me: Hi, I am Gaurav.

John: Hi, I am John.

Me: How are you doing?

John: I am doing good. You?

Me: I am doing good too. So, I hear you are an expert on ML. So, can you tell me what it is?

John: Hmmm, ML stands for Machine Learning. It is the process of, er, a computer’s ability to learn by itself when given some training data.

Me: Ah! I get it. Sort of like how a kid learns, right?

John: Yes, in its simplest form.

Me: And what is DL?

John: Well, DL stands for Deep Learning. It is more like a subset of Machine Learning. It mostly uses algorithms loosely based on how the brain works. You would have heard of Artificial Neural Networks, right?

Me: Oh yes! So, Artificial Neural Networks like Convolutional Neural Networks and Recurrent Neural Networks are part of DL?

John: Yes, indeed.

Me: Now I understand. Thanks for the explanation.

John: Anytime.

There is an interaction, a to and fro, between John and me, and through this conversation I get an idea of what Machine Learning and Deep Learning are. There are many ways this conversation could have played out, and that dynamic interaction is what we are looking to replicate with ML.

Conversational AIs use Natural Language Processing (NLP) to conduct human-like interactions with people. They are an evolved version of traditional chatbots, which serve up scripted responses or fall back to a default response. Conversational AIs are essentially smart chatbots.

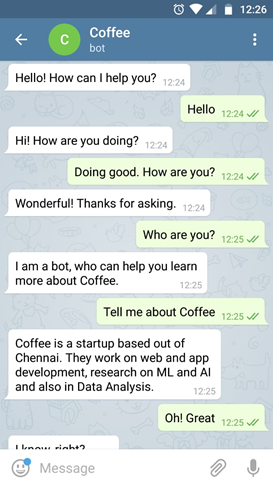

Consider a simple bot that tells you more about Coffee, the startup where I spend most of my days.

On being asked “How are you?”, the bot responds “Wonderful! Thanks for asking.” It even thanks you for asking. There is a lot of to and fro in this conversation, which you can follow in the image. I would like to draw your attention to the last message, “I know, right?”, sent in response to my “Oh! Great.” That sort of dynamic interaction, or rather, that reaction to my statement, is what makes a bot smart, and this is where ML and AI play a major part.

So, let’s look at the options for building such a bot.

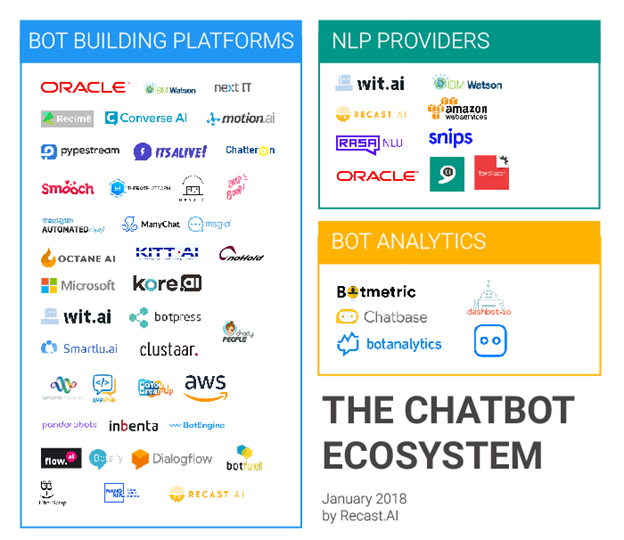

The Chatbot Ecosystem

There are multiple ways to go about building a smart conversational AI. You can leverage bot-building platforms such as Dialogflow, IBM Watson, wit.ai, recast.ai, Rasa and more. Bot-building platforms let you build an end-to-end conversational pipeline that processes the user’s input and predicts an action based on the user’s intent. These platforms also provide options for integrating with a frontend platform of your choice.

Alternatively, you can opt for NLP providers such as Rasa NLU, wit.ai, IBM Watson, AWS and more. These platforms provide only the Natural Language Processing part, on top of which you build your own processing and ML model to generate the conversational flow.

Flow of Conversational AI

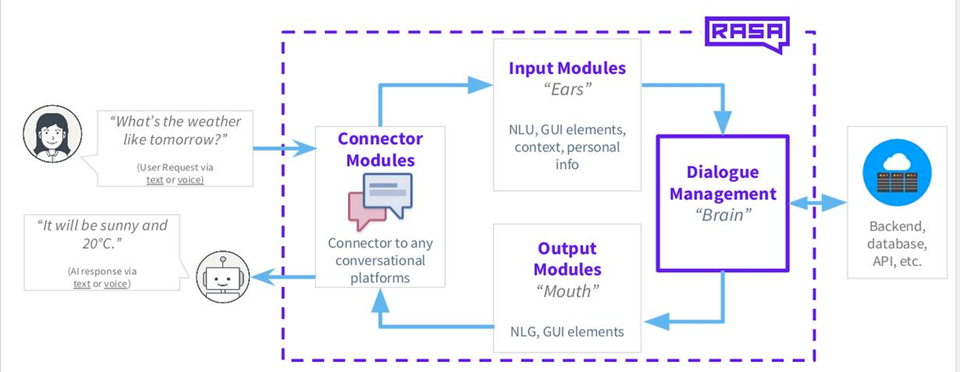

A basic Conversational AI takes in the user request via text or voice, and passes it to the following modules:

- Connector Modules:

This is the conversational platform, i.e. the frontend, such as a website or an app, where the user interacts with the AI.

- Input Modules:

The user request is fed to this module, which performs Natural Language Understanding (NLU).

- Dialogue Management:

This module takes the NLU output and maintains the context of the conversation. It then uses a Machine Learning model, such as an RNN or LSTM, to suggest an appropriate action to take. This module can also be connected to a backend or database to perform additional processing based on the suggested action.

In the example in the diagram, the module recognizes that the user is requesting the weather and connects to a weather API to perform the appropriate action.

- Output Modules:

This module performs Natural Language Generation (NLG) to return an appropriate response to the user.

The AI’s response is then sent back to the user via text or voice through the Connector Module.
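To make the flow concrete, here is a minimal Python sketch of the modules wired together. It is an assumption-heavy toy: keyword rules stand in for the NLU model, a few if-statements stand in for the ML-based dialogue manager, `weather_backend` is a hypothetical stub rather than a real weather API, and every function name is made up for illustration.

```python
import re

# Hypothetical, rule-based stand-ins for each module described above.
# A real conversational AI would use trained NLU and dialogue models instead.


def input_module(text):
    """NLU: map raw text to an intent and entities (toy keyword rules)."""
    lowered = text.lower()
    entities = {}
    if "tomorrow" in lowered:
        entities["date"] = "tomorrow"
    if "weather" in lowered:
        intent = "request_weather"
    elif re.search(r"\b(hi|hello|hey)\b", lowered):
        intent = "greet"
    else:
        intent = "unknown"
    return {"intent": intent, "entities": entities}


def weather_backend(date):
    """Stub for an external weather API (assumption: always returns 'sunny')."""
    return "sunny"


def dialogue_management(parse, context):
    """Keep the conversation context and pick the next action (rules, not an RNN/LSTM)."""
    context.append(parse)
    if parse["intent"] == "request_weather":
        date = parse["entities"].get("date", "today")
        return "report_weather", {"date": date, "forecast": weather_backend(date)}
    if parse["intent"] == "greet":
        return "greet_back", {}
    return "fallback", {}


TEMPLATES = {
    "report_weather": "The forecast for {date} is {forecast}.",
    "greet_back": "Hello! How can I help?",
    "fallback": "Sorry, I didn't get that.",
}


def output_module(action, slots):
    """NLG: fill the response template for the chosen action."""
    return TEMPLATES[action].format(**slots)


def connector(text, context):
    """Frontend entry point: pass the text through every module and return the reply."""
    parse = input_module(text)
    action, slots = dialogue_management(parse, context)
    return output_module(action, slots)


history = []
print(connector("Hi there", history))  # Hello! How can I help?
print(connector("What's the weather like tomorrow?", history))  # The forecast for tomorrow is sunny.
```

Each module only sees the previous module’s output, which is what lets a real system replace the keyword rules with trained models without touching the rest of the pipeline.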

Conversational AI with Rasa

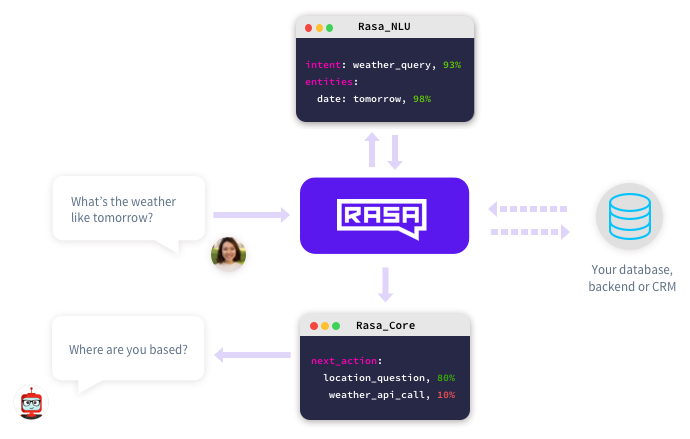

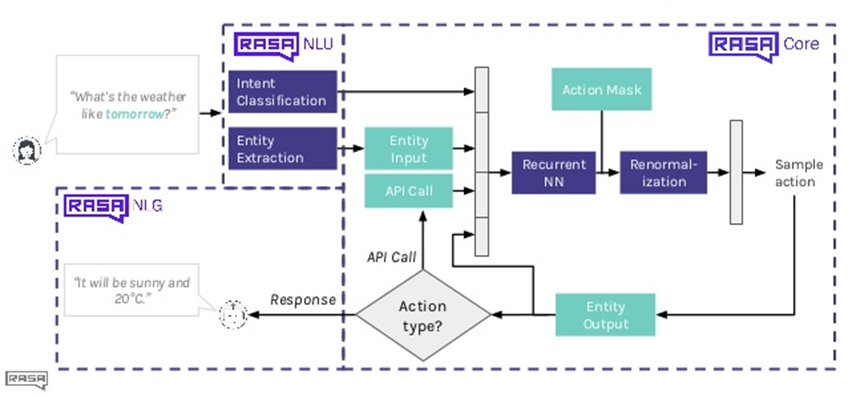

Rasa is an open-source bot framework that can be used to build contextual AI assistants and chatbots. It has the following two components:

1. Rasa NLU, which interprets the user message.

2. Rasa Core, which tracks the conversation and decides what happens next by suggesting the most appropriate action to take.

Rasa doesn’t offer pre-built models hosted on a server that you can call through an API. You need to prepare your own training data and train Rasa on it. However, you are in complete control of every component of your chatbot, which makes it well worth the time.
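As an illustration of what preparing training data can look like, the sketch below holds a few examples in the Markdown style that older Rasa versions accepted for NLU data (`## intent:` headers, one example per `- ` line, entities annotated as `[value](entity)`) and parses them into plain dictionaries. The `load_training_data` and `parse_example` helpers are hypothetical, not Rasa’s own loader, and newer Rasa releases have moved to a YAML format.

```python
import re

# Sample training data in the Markdown style used by older Rasa NLU versions.
TRAINING_DATA = """
## intent:greet
- hi
- hello there

## intent:request_weather
- what's the weather like [tomorrow](date)?
- will it rain in [Pune](place) [today](date)?
"""

# Matches "[value](entity)" annotations inside an example sentence.
ENTITY_PATTERN = re.compile(r"\[(?P<value>[^\]]+)\]\((?P<entity>[^)]+)\)")


def parse_example(line):
    """Strip [value](entity) markup, recording each entity's character span."""
    text, entities, cursor = "", [], 0
    for match in ENTITY_PATTERN.finditer(line):
        text += line[cursor:match.start()]
        start = len(text)
        text += match.group("value")
        entities.append({"start": start, "end": len(text),
                         "value": match.group("value"),
                         "entity": match.group("entity")})
        cursor = match.end()
    text += line[cursor:]
    return {"text": text, "entities": entities}


def load_training_data(markdown):
    """Hypothetical loader: return a list of {text, intent, entities} examples."""
    examples, intent = [], None
    for raw in markdown.splitlines():
        line = raw.strip()
        if line.startswith("## intent:"):
            intent = line[len("## intent:"):]
        elif line.startswith("- ") and intent:
            example = parse_example(line[2:])
            example["intent"] = intent
            examples.append(example)
    return examples
```

Recording character offsets rather than just the values keeps each entity tied to its exact position in the sentence, which is the kind of supervision an entity extractor is trained on.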

Let’s take a closer look at the two components of the Rasa stack and how they use NLP and ML.

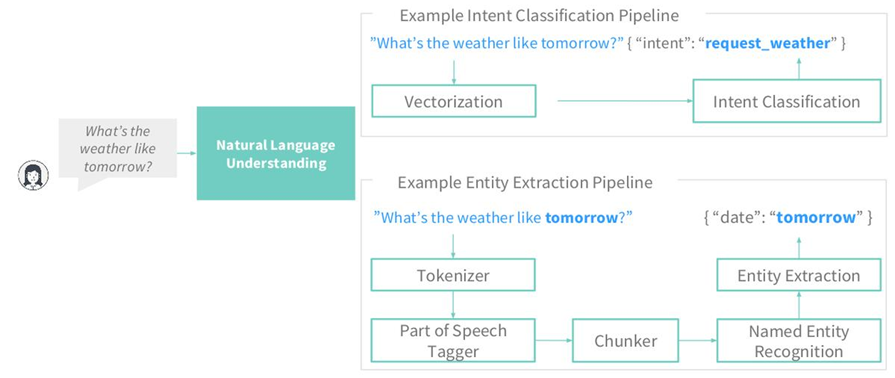

Natural Language Understanding (Rasa NLU):

Rasa NLU processes user input text and understands what the user is trying to say. Basically, it takes the user text as input and extracts the intent and entities from it.

- Intent: An intent represents the purpose of a user’s input, i.e. what the user wants to do. The user’s input text is first vectorized (converted into numerical features), and the intent is then predicted from those features.

- Entity: An entity represents a term or object that is relevant to your intents and that provides a specific context for an intent.
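The vectorize-then-classify step for intents can be illustrated with a deliberately simple stand-in: a bag-of-words vector per message and cosine similarity against one summed vector (centroid) per intent. Rasa NLU’s real pipelines use trained featurizers and classifiers; the training sentences, `vectorize` and `classify_intent` here are hypothetical and only show the shape of the idea.

```python
import math
import re
from collections import Counter

# Toy training data (assumption: illustrative sentences, not a real dataset).
TRAINING = [
    ("hi", "greet"),
    ("hello there", "greet"),
    ("what's the weather like tomorrow", "request_weather"),
    ("will it rain today", "request_weather"),
    ("is it sunny outside", "request_weather"),
]


def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())


def vectorize(text):
    """Bag-of-words vector (token -> count), a sparse stand-in for a featurizer."""
    return Counter(tokenize(text))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# "Training": sum the vectors of each intent's examples into one centroid.
CENTROIDS = {}
for sentence, label in TRAINING:
    CENTROIDS.setdefault(label, Counter()).update(vectorize(sentence))


def classify_intent(text):
    """Return (intent, confidence) for the most similar intent centroid."""
    vec = vectorize(text)
    scores = {intent: cosine(vec, centroid) for intent, centroid in CENTROIDS.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]
```

For instance, `classify_intent("How's the weather tomorrow?")` picks `request_weather`, because “the”, “weather” and “tomorrow” overlap with that intent’s training sentences and nothing overlaps with `greet`.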

Taking the example “What’s the weather like tomorrow?”, we get the following:

- Intent: “request_weather” => The user is requesting the weather.

- Entity: { “date”: “tomorrow” } => When is the user requesting the weather for?

You can define additional intents and entities based on your application. For example, you can also define a “place” entity, so that the bot knows which location the weather is being requested for.
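Entity extraction can likewise be sketched with plain dictionary lookups that produce the same kind of structured result as the weather example, including the optional “place” entity. The `DATE_WORDS` and `KNOWN_PLACES` tables and the `parse` helper are hypothetical; a trained entity extractor learns to recognize values it has never seen, which a lookup table cannot.

```python
import re

# Hypothetical lookup tables; a trained extractor would generalize beyond them.
DATE_WORDS = {"today", "tomorrow", "tonight", "yesterday"}
KNOWN_PLACES = {"pune", "mumbai", "london"}


def extract_entities(text):
    """Return entities as {entity_name: value} using simple dictionary lookups."""
    entities = {}
    for token in re.findall(r"[a-z]+", text.lower()):
        if token in DATE_WORDS:
            entities["date"] = token
        elif token in KNOWN_PLACES:
            entities["place"] = token.title()
    return entities


def parse(text, intent):
    """Combine an already-classified intent with the extracted entities."""
    return {"text": text, "intent": intent, "entities": extract_entities(text)}
```

Here `parse("What's the weather like tomorrow?", "request_weather")` yields the entities `{"date": "tomorrow"}`, while “Weather in Pune today?” also fills the `place` entity.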

For a breakdown and a deeper understanding of Rasa NLU, you can check out an article I previously wrote on Understanding Rasa NLU.

Dialog Handling (Rasa Core):

Once the intent and entities have been extracted from the user input, they are passed to Rasa Core, which decides what happens next in the conversation. It uses machine learning-based dialogue management to predict the next best action based on the input from NLU, the conversation history and your training data.
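Before a model can predict the next action, the conversation history has to be turned into numbers. The following is a minimal sketch, assuming a made-up domain (the `INTENTS`, `ENTITIES` and `ACTIONS` lists), of one common way to do this: each turn becomes an intent one-hot vector, entity-presence flags and a one-hot vector of the previous action, and only the last few turns are kept. It is similar in spirit to how Rasa Core featurizes its conversation tracker, but it is not Rasa’s featurizer.

```python
# Hypothetical domain: these intents, entities and actions are illustrative only.
INTENTS = ["greet", "request_weather"]
ENTITIES = ["date", "place"]
ACTIONS = ["action_listen", "utter_greet", "location_question", "weather_api_call"]


def one_hot(value, vocabulary):
    return [1.0 if item == value else 0.0 for item in vocabulary]


def featurize_turn(intent, entities, previous_action):
    """Encode one turn: intent one-hot + entity presence flags + previous action one-hot."""
    return (one_hot(intent, INTENTS)
            + [1.0 if name in entities else 0.0 for name in ENTITIES]
            + one_hot(previous_action, ACTIONS))


def featurize_history(turns, max_history=3):
    """Keep the last `max_history` turns, left-padding with zero vectors."""
    width = len(INTENTS) + len(ENTITIES) + len(ACTIONS)
    recent = [featurize_turn(*turn) for turn in turns[-max_history:]]
    padding = [[0.0] * width for _ in range(max_history - len(recent))]
    return padding + recent
```

Fixing the window size means every input to the model has the same shape, no matter how long the conversation has been running.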

Rasa Core uses a neural network implemented in Keras, with a default model architecture based on an LSTM, to predict the next action.

LSTMs (Long Short-Term Memory networks) are a special kind of Recurrent Neural Network (RNN), capable of selectively remembering patterns over long sequences. This makes them well suited to maintaining the context of a conversation and predicting the next action. You can check out this article on the Introduction to LSTM to understand LSTMs further. You can also check out this presentation by Vishal Gupta.
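The sketch below shows the mechanics an LSTM-based policy relies on, written from scratch in plain Python rather than Keras: input, forget and output gates decide what the cell state keeps from each turn, and a final softmax turns the last hidden state into one probability per action. The `TinyLSTMPolicy` class, its sizes and its random, untrained weights are assumptions for illustration; a real policy learns its weights from training conversations.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def softmax(scores):
    """Turn raw scores into probabilities (shifted by the max for stability)."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class TinyLSTMPolicy:
    """Untrained, from-scratch LSTM mapping a sequence of turn vectors to action probabilities."""

    GATES = ("input", "forget", "output", "candidate")

    def __init__(self, input_size, hidden_size, num_actions, seed=0):
        rng = random.Random(seed)

        def init(rows, cols):
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

        # Each gate sees the current input concatenated with the previous hidden state.
        self.weights = {g: init(hidden_size, input_size + hidden_size) for g in self.GATES}
        self.biases = {g: [0.0] * hidden_size for g in self.GATES}
        self.output_weights = init(num_actions, hidden_size)
        self.hidden_size = hidden_size

    def step(self, x, h, c):
        """Process one turn and return the updated (hidden, cell) state."""
        z = list(x) + list(h)
        pre = {g: [s + b for s, b in zip(matvec(self.weights[g], z), self.biases[g])]
               for g in self.GATES}
        i = [sigmoid(v) for v in pre["input"]]   # how much new information to write
        f = [sigmoid(v) for v in pre["forget"]]  # how much of the old cell state to keep
        o = [sigmoid(v) for v in pre["output"]]  # how much of the cell state to expose
        g = [math.tanh(v) for v in pre["candidate"]]
        c = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c, i, g)]
        h = [ov * math.tanh(cv) for ov, cv in zip(o, c)]
        return h, c

    def predict(self, sequence):
        """Run the whole turn sequence, then score each action from the final hidden state."""
        h = [0.0] * self.hidden_size
        c = [0.0] * self.hidden_size
        for x in sequence:
            h, c = self.step(x, h, c)
        return softmax(matvec(self.output_weights, h))
```

For example, `TinyLSTMPolicy(input_size=8, hidden_size=4, num_actions=4).predict(sequence)` returns four probabilities that sum to 1; with untrained weights, which action scores highest carries no meaning.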

For our weather request example, Rasa Core suggests an action such as “location_question” or “weather_api_call”. Finally, based on the action type, the relevant response is generated by Rasa’s NLG, which is part of Rasa Core.
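The last two steps of the weather example, picking between “location_question” and “weather_api_call” and then generating the reply, might look like the sketch below. Note the simplification: `choose_action` is a hand-written rule, whereas Rasa Core would learn this choice from training data; `fetch_forecast` is a hypothetical stub standing in for a real weather API, and the templates are made up.

```python
# Hypothetical response templates for the two weather actions.
TEMPLATES = {
    "location_question": "Which city would you like the weather for?",
    "weather_api_call": "The forecast for {place} {date} is {forecast}.",
}


def fetch_forecast(place, date):
    """Stand-in for a real weather API call (assumption: always returns 'sunny')."""
    return "sunny"


def choose_action(entities):
    """Hand-written rule: ask for the location if it is missing, else call the API."""
    return "weather_api_call" if "place" in entities else "location_question"


def run_action(action, entities):
    """Execute the action and render its response template (simple template-based NLG)."""
    if action == "weather_api_call":
        date = entities.get("date", "today")
        forecast = fetch_forecast(entities["place"], date)
        return TEMPLATES[action].format(place=entities["place"], date=date, forecast=forecast)
    return TEMPLATES[action]
```

With only `{"date": "tomorrow"}` extracted, the bot asks which city the user means; once a `place` is known, it calls the (stubbed) API and fills in the forecast template.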

This covers the basics of how machine learning is used to build a Conversational AI. You should now understand the flow of a conversation and how the different components of Rasa come together to perform the required tasks. A major advantage of Rasa is that its components are interchangeable: you can use Rasa Core and Rasa NLU separately, plugging either one into your own custom-built models.
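Interchangeability is easiest to see when each component sits behind a small interface. In this sketch, the hypothetical `NLUModel` and `DialogueModel` protocols play the roles of Rasa NLU and Rasa Core, and the `Assistant` class accepts any object that implements them, so either side can be swapped for a custom model. None of these names are Rasa’s API.

```python
from typing import Dict, List, Protocol


class NLUModel(Protocol):
    """Anything that turns text into {"intent": ..., "entities": ...}."""

    def parse(self, text: str) -> Dict: ...


class DialogueModel(Protocol):
    """Anything that picks the next action from the parsed conversation history."""

    def next_action(self, history: List[Dict]) -> str: ...


class KeywordNLU:
    """Hypothetical custom NLU model, e.g. one you built yourself."""

    def parse(self, text: str) -> Dict:
        intent = "request_weather" if "weather" in text.lower() else "greet"
        return {"intent": intent, "entities": {}}


class IfElseDialogue:
    """Hypothetical custom dialogue model that only looks at the latest intent."""

    def next_action(self, history: List[Dict]) -> str:
        if history[-1]["intent"] == "request_weather":
            return "weather_api_call"
        return "utter_greet"


class Assistant:
    """Wires any NLUModel to any DialogueModel; each can be replaced independently."""

    def __init__(self, nlu: NLUModel, dialogue: DialogueModel):
        self.nlu = nlu
        self.dialogue = dialogue
        self.history: List[Dict] = []

    def handle(self, text: str) -> str:
        self.history.append(self.nlu.parse(text))
        return self.dialogue.next_action(self.history)
```

Because `Assistant` depends only on the two method signatures, replacing `KeywordNLU` with a trained model requires no change to the dialogue side, and vice versa.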

You can get started with building your first Rasa Conversational AI by checking out this Google Colab notebook: https://colab.research.google.com/drive/1pIcv7eEKhNnl2zf4JPl9FvTv1sInlhBT

If you have any comments, I’d be happy to discuss further. Respond here or find me on Twitter.